Who’s really to blame for CMS performance challenges?

Despite the title, this post isn’t about finger-pointing. Rather, I want to take some time to address the fact that performance is a widely recognized issue for sites that use a content management system. And I want to scratch the surface of these questions: to what degree is this a vendor problem versus a user problem, and what can site owners do to fix it?

A couple of weeks back, I made this prediction for 2014:

From WordPress to enterprise CMSes, we all know that content management systems cause performance hits that are hard — or downright impossible — to mitigate. This year, I think we’re going to see more CMS vendors realize that offering a product that enables better performance is a serious competitive differentiator.

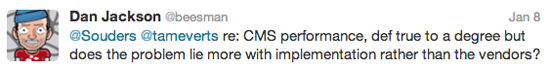

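Dan Jackson replied on Twitter with a good question, essentially asking whether this is a problem with the CMSes themselves or with how people use them.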

The answer is: a bit of both. But the good news is that these problems are fixable.

First, some back story…

Roughly 33% of the top 10 million websites in the world use some kind of CMS. WordPress is by far the dominant player in the CMS space, with 60% market share. (I’ll spare you the math: this means that roughly 20% of websites use WordPress.) Lagging far behind are the other market leaders, including Joomla, Drupal, Blogger, and Magento, which collectively have about 6% market share. And then there are a few dozen more vendors bringing up the rear. [source]

There are three main reasons why CMSes were created and why they’re so popular:

- To segregate content from design and code, so anyone can make site updates.

- To create approval-based publishing processes for managers.

- To create audit trails for corporate lawyers.

Reason #1 is the real issue up for discussion today: non-technical people creating and adding content, AKA “when good content creators go bad”.

These well-intentioned folks don’t know about performance. They have no idea that their oversized graphics, animated GIFs, and blinking rainbow-coloured headers can easily exceed 1 or 2 MB each, or that the text they copied and pasted directly from a Word doc is full of useless, bloated markup. And the CMSes themselves don’t warn users about these performance issues, because nobody has ever asked the vendors to build this as a feature. Of course, no one asks for this feature because, as I pointed out at the top of this paragraph, the average content creator doesn’t understand performance. It’s a cycle perpetuated by lack of information.
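To make the Word-paste problem concrete, here is a minimal Python sketch that estimates how many bytes of Word-specific markup a pasted snippet carries. The patterns (`Mso*` classes, `mso-*` inline styles, `<o:p>` tags, and `<!--[if ...]>` conditional comments) are common signatures of Word-generated HTML, but the list is an assumption and far from exhaustive. `strip_word_markup` and `word_markup_overhead` are hypothetical helpers, not part of any CMS.

```python
import re

# Hypothetical, non-exhaustive signatures of HTML pasted from Microsoft Word.
# Order matters: conditional comments are removed first because they can
# contain the other patterns.
WORD_PATTERNS = [
    # <!--[if gte mso 9]><xml>...</xml><![endif]--> blocks
    re.compile(r"<!--\[if[\s\S]*?<!\[endif\]-->", re.IGNORECASE),
    # Office namespace paragraph tags: <o:p></o:p>
    re.compile(r"</?o:p>", re.IGNORECASE),
    # Inline style declarations such as mso-margin-top-alt:auto;
    re.compile(r"mso-[a-z-]+\s*:[^;\"']*;?", re.IGNORECASE),
    # Class attributes such as class="MsoNormal" or class=MsoNormal
    re.compile(r'\s*class="?Mso\w*"?', re.IGNORECASE),
]


def strip_word_markup(html: str) -> str:
    """Remove the Word-specific patterns above (a rough estimate, not a sanitizer)."""
    for pattern in WORD_PATTERNS:
        html = pattern.sub("", html)
    return html


def word_markup_overhead(html: str) -> int:
    """Return the number of UTF-8 bytes attributable to the patterns above."""
    cleaned = strip_word_markup(html)
    return len(html.encode("utf-8")) - len(cleaned.encode("utf-8"))


if __name__ == "__main__":
    pasted = '<p class="MsoNormal" style="mso-margin-top-alt:auto">Hello<o:p></o:p></p>'
    print(strip_word_markup(pasted))     # <p style="">Hello</p>
    print(word_markup_overhead(pasted))  # 52
```

In that tiny example, 52 of the 73 bytes are Word residue. Regex stripping like this is only good enough for an estimate; a real cleanup step would use a proper HTML parser or sanitizer.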

(Note: The paragraph above might sound a bit critical, which isn’t my intent. We can’t expect the average person to care about something they don’t know about. This is why it’s the job of people inside the performance community to keep evangelizing performance outside our community.)

1. What kinds of performance problems do CMSes cause?

At the front end, a CMS by itself doesn’t really cause any problems. Almost all of the “problems” are caused by content editors (who use rich text editors and have no idea what markup is hidden in the content they copy and paste from other websites) and by designers (who create nice-looking design elements without considering performance).

At the back end, however, some CMSes do create performance challenges. In this post for the 2011 Performance Calendar, Pat Meenan noted that while the back end is only responsible for 10-20% of response time, when it goes wrong, it can really go wrong. Among other things, Pat shared his finding that “It is not uncommon to see 8-20s back-end times from virtually all of the different CMS systems.” Ouch. This problem is largely attributed to the fact that hosting for these (admittedly not top-tier) sites varies hugely, with shared hosting being the most common environment, and that site owners have zero visibility into the performance of the system they’re running on.
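One rough way for a site owner to get some visibility into back-end time is to measure time to first byte (TTFB): how long it takes from sending a request until the response headers come back. The sketch below uses only Python's standard library; `time_to_first_byte` and `median_ttfb` are hypothetical helpers. The number includes connection setup and network latency, so treat it as an upper bound on back-end time rather than a precise server-side measurement.

```python
import http.client
import statistics
import time
from urllib.parse import urlsplit


def time_to_first_byte(url: str, timeout: float = 30.0) -> float:
    """Seconds from sending a GET request until the status line and headers arrive.

    The connection is opened lazily by request(), so this also includes DNS,
    TCP (and TLS, for https) setup plus network round trips. It is an upper
    bound on back-end time, not a pure server-side measurement.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed = time.perf_counter() - start
        response.read()  # drain the body before closing
        return elapsed
    finally:
        conn.close()


def median_ttfb(url: str, samples: int = 5) -> float:
    """Median of several TTFB samples, so a single outlier doesn't skew the result."""
    return statistics.median(time_to_first_byte(url) for _ in range(samples))
```

For example, `median_ttfb("https://example.com/")` takes five samples and returns the median in seconds. Consistently multi-second values point at the back end or the hosting environment rather than at front-end assets; a tool like WebPagetest gives a far more detailed breakdown.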

2. What kinds of performance fixes do CMSes prevent?

CMSes do make some optimizations difficult, depending on how extensible and open they are. Many CMS vendors make it very difficult to manipulate core objects such as images. Some make it difficult to edit and access JavaScript and CSS files, though this is changing. Many lack extensible editors or frameworks that could inspect the wildly unoptimized resources that editors and designers add and warn people that, from a performance perspective, these resources suck.

3. Is this a problem with the CMS itself, or with how the CMS is implemented?

Both. Some CMSes are, frankly, not good. But most, to use a favorite analogy from my Strangeloop roots, are like trusty old firearms. Guns don’t kill people — people kill people. As we’ve already discussed here, the users of the system are a major challenge.

Another challenge is the fact that most in-house developers don’t want to touch a third-party CMS to make it more performance-friendly. There’s a perceived cultural bias that any developer with real chops shouldn’t be caught dead working on a CMS because it’s not serious coding.

4. So what’s the solution?

In an ideal world, a CMS would warn content creators about performance-hurting practices. To be fair to CMS vendors, this is difficult to codify. However, given the increasing emergence of performance as a feature, I feel that vendors should at least go after low-hanging fruit such as grossly unoptimized images and hidden markup.
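As an illustration of that low-hanging fruit, here is a minimal Python sketch of a pre-publish check that flags oversized image uploads. The 200 KB budget, the list of file extensions, and the `oversized_images` and `publish_warnings` helpers are all assumptions for illustration; a real check would likely also look at pixel dimensions and format, not just file size.

```python
from pathlib import Path

# Hypothetical budget and file types; tune these for your own site.
MAX_IMAGE_BYTES = 200 * 1024
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def oversized_images(paths, max_bytes=MAX_IMAGE_BYTES):
    """Return (file name, size in bytes) for each image over budget, largest first."""
    flagged = []
    for path in map(Path, paths):
        if path.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # only check image uploads
        size = path.stat().st_size
        if size > max_bytes:
            flagged.append((path.name, size))
    return sorted(flagged, key=lambda item: item[1], reverse=True)


def publish_warnings(paths, max_bytes=MAX_IMAGE_BYTES):
    """Turn oversized images into plain-language warnings for content editors."""
    return [
        f"{name} is {size / 1024:.0f} KB (budget: {max_bytes / 1024:.0f} KB). "
        "Consider resizing or compressing it before publishing."
        for name, size in oversized_images(paths, max_bytes)
    ]
```

The point of the plain-language message is that the person seeing it is a content editor, not a developer. Wiring a check like this into a CMS's upload or publish step is vendor-specific, so that part is left out.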

It would also be great if vendors could give site owners overall visibility into the performance of their pages, specifically at the back end. I know so many owners of smaller sites who know that their pages are slow but, because they have no visibility, don’t know where to begin to tackle the problem. And hey, so long as we’re blue-skying, how about addressing the back-end response-time issue that Pat identified a couple of years ago?

My prediction/dream is that CMS vendors will step up to make performance a feature. Until then, if you’re using a content management system, you need to have a good team dedicated to looking at your site metrics on a regular basis (e.g., using a tool like WebPagetest). And you know what? An excellent FEO (front-end optimization) solution would be great, too. 😉